Quantitative Trading

Quantitative investment and trading ideas, research, and analysis.

Thursday, November 27, 2025

Deep Latent Variable Models

Monday, October 27, 2025

Features Selection in the Age of Generative AI

Friday, October 03, 2025

Book and Workshop Introduction: Generative AI for Trading & Asset Management

By Hamlet Medina & Ernest Chan

***

A Weekend with Ernie Chan in London : Trading with GenAI

Imperial College London, United Kingdom

Nov 22, 2025, 9:00 AM - Nov 23, 2025, 5:00 PM

***

The world of finance is no stranger to artificial intelligence. Most quantitative asset managers are already familiar with discriminative models, for example, given yesterday’s return, what is the probability that today’s return will be positive? Many are also familiar with reinforcement learning, used for tasks like optimizing order execution or figuring out how to set the best capital allocations within a portfolio to get a better Sharpe ratio.

But now there’s a new wave of AI, Generative AI, or GenAI for short, that’s making headlines and even filling up your social media feeds.

In the news, GenAI is usually associated with large language models (LLMs) like ChatGPT, or with image generation tools like MidJourney, essentially, machines that can learn from text or images and generate text or images. But in reality, these models can learn from many different types of data. In particular, they can learn from time series of asset returns, which is perhaps the most relevant for asset managers.

In our book (Check out Generative AI for Trading and Asset Management 1st Edition on Amazon), we explore both the practical applications and the fundamental principles of GenAI, with a special focus on how these technologies apply to trading and asset management.

A Book for Foundations and Practitioners

The book is divided into two broad parts:

Part 1, written by Ernie, starts with no-code applications of GenAI for traders and asset managers with little or no coding experience. After that, it takes readers on a whirlwind tour of machine learning techniques commonly used in finance.

Part 2, written by Hamlet, covers the fundamentals and technical details of GenAI, from modeling to efficient inference. This part is for those who want to understand the inner workings of these models and how to adapt them to their own custom data and applications. It’s for anyone who wants to go beyond the high-level use cases, get their hands dirty, and apply, and eventually improve these models in real-world practical applications.

Readers can start with whichever part they want to explore and learn from.

Part 1. No-Code GenAI for Traders and Asset Managers

In the first two chapters of Part 1, we show practical, hands-on examples of how GenAI can assist traders and asset managers with tasks they deal with all the time. Things like:

Retrieving adjusted price data from public sources

Computing basic performance metrics

Converting trading strategy code from one language to another, for example, from Matlab to Python (we use examples from Ernie’s Machine Trading book)

Generating Python code based on plain-language descriptions of a strategy

Summarizing research papers and turning the key ideas into code

What we will not show you is this:

“ChatGPT, give me a strategy that will make me millions so I can deploy it right now and quit my day job.”

At this stage, GenAI does not provide reliable fully automated alpha generation. Still, GenAI can assist with the strategy development process. We demonstrate examples where GenAI helped draft and understand:

A long-short factor strategy

A VIX futures carry strategy

A SPX options calendar spread

Literature searches on portfolio optimization using deep reinforcement learning

All these tasks were done using familiar tools like ChatGPT GPT-4o and Microsoft’s Copilot. Please, keep in mind that these models are evolving quickly. We tested these examples in early 2024. If you are curious how newer versions of LLMs perform on the same tasks, Ernie did a follow-up in April 2025. You can check it out on our Substack: Have LLMs improved over the last year?

This part of the book is designed to be accessible, so no need for advanced prompt engineering or advanced development workflows. But for those with more experience, we encourage experimenting with these same examples using Agentic workflows, with tools like Cursor, Codex, Deep Research, and others, where capabilities are evolving really fast.

A Quick Tour of AI for Asset Managers

Before diving deeper into Generative AI, we included a short but essential tour of machine learning techniques commonly used in finance, with topics like:

Supervised learning.

Unsupervised learning,

Reinforcement learning. For a recent post on reinforcement learning for portfolio optimization, check this: Deep Reinforcement Learning for Portfolio Optimization

Techniques to avoid overfitting and improve model selection

Practical aspects of data engineering, which are often just as important as the model itself.

If you are new to AI, this chapter provides the fundamentals. If you’re already familiar with machine learning, think of it as a refresher. It will help you to set the stage for understanding where GenAI fits into the bigger picture.

Part 2. Technical Foundations, Advanced Models, Real-World Deployment

Part 2 of the book dives into the fundamentals and technical details of GenAI, covering topics like:

How to generate time series synthetic data, along with different methods to evaluate the quality of the generated data

Forecasting applications

Alternative data processing

Sentiment analysis with LLMs

Techniques for efficient inference, including Parameter Efficient Fine-Tuning (PEFT), Knowledge distillation and Quantization.

We also cover several other important topics, including the famous scaling laws used to improve the performance of these models, the emerging abilities of LLMs, and how to scale these models for production environments, among many others.

Foundations and Generative Model Families

We start with Chapter 4, which provides an introduction to GenAI and highlights the key differences between discriminative and generative models.

Chapter 4 also touches on an important topic that rarely gets enough attention, how combining the power of discriminative and generative models can help better handle uncertainty in predictions, a topic especially relevant in finance. To get just a feeling about this, feel free to check out our video presentation here: GenAI & Automated Trading Summit | Algorithmic Trading on MT5 & TradingView

Another key point we explore is that generative models are not just about generating text or images. They are also powerful tools for representation learning, which is key for techniques like Retrieval Augmented Generation (RAG). If you’ve been following recent AI developments, you know RAG is everywhere, helping enrich the context of your input query making LLMs produce answers that are better grounded in external data.

Each of the major generative model families gets its own dedicated chapter:

Deep autoregressive models, with the famous Transformer as the main example

Variational Autoencoders (VAEs)

Flow models

Generative Adversarial Networks (GANs)

We explain how these models were originally developed and, more importantly, how to adapt them to the unique dynamics of financial time series, with practical, hands-on notebook examples using real financial data. For those who want more examples of how to use transformers in finance, they can visit our blog posts: A Poor Person's Transformer, Applying Transformers to Financial Time Series, and Cross-Attention for Cross-Asset Applications

Practical Applications, Efficient Inference, and Beyond

The final chapters focus on building end-to-end applications. For example, we demonstrate how to create a system that takes speech data as input and outputs trading signals. This system leverages powerful models, such as:

Transformers for sequence-to-sequence tasks, converting audio signals to text

LLMs for sentiment analysis, assigning sentiment scores to financial news or speeches

We apply these techniques to analyze the sentiment of Federal Reserve (Fed) press conference speeches, generating trading signals based on that sentiment. Below you can see the general pipeline of this system:

In this chapter, you will see how to:

Preprocess alternative data

Generate trading signals

Use LLMs for sentiment analysis in trading

If you want a preview of this before diving into the book, check out our video presentation: Trading Using LLM | Generative AI & Sentiment Analysis for Finance | Webinar.

We conclude Part 2 with the efficient inference topic. When it comes to real-world settings, you quickly run into practical constraints like cost and scalability for deploying these models, especially if you want to apply them for high-frequency trading. We show techniques and optimizations to help you improve the inference speed of these models.

Tools, Reproducibility, and Practical Focus

The book comes with code snippets, color-highlighted for easy reading, so you can see how to move from theory to working code. We’ve also included a full code repository of examples for each chapter, focusing as much as possible on reproducibility. Most of the examples have been carefully chosen not just for practicality, but also based on how well they convey key ideas, and their influence in the field (often measured by the number of citations). So, you might find code examples ranging from synthetic data generation, to forecasting with transformer models, or even building your own custom Finance LLM to answer finance-related questions by fine-tuning LLaMA 3.1 8B using QLoRA, among others.

We’ve tried to use tools and frameworks that are common in both industry and some well-known AI labs. That way, for example, if you want to experiment with building your own foundational models for time series, you can start from a familiar framework that allows you to easily benchmark your work with common datasets and metrics used in research papers in the field, and, just as importantly, test your ideas directly on financial data.

To the best of our knowledge, this was the first book, at the time of writing, to cover such a broad range of topics in the field of finance. We conclude the book by summarizing the main techniques discussed and emphasizing the critical role of domain expertise in designing meaningful trading strategies, particularly when using LLMs as copilots. We also discuss why GenAI, despite its success in other domains, may still require more empirical work to achieve similar results in finance. Finally, we highlight some promising research directions where GenAI could significantly impact the future of finance.

To follow our updates, here’s where we are:

Book Substack: gatambook.substack.com

Ernie’s X (Twitter) and Substack: x.com/echanQT, substack.com/@erniechan

Hamlet’s X (Twitter) and Substack: x.com/hamletjmedina, substack.com/@hamletmedina

Wednesday, June 18, 2025

Deep Reinforcement Learning for Portfolio Optimization

Is it really better than Predictnow.ai's Conditional Portfolio Optimization scheme?

We wrote a lot about transformers in the last three blog posts. Their sole purpose was for feature transformation / importance weighting. These transformed and attention-weighted features will be used as input to downstream applications. In this blog post, we will discuss one such application: portfolio optimization via deep reinforcement learning.

Read more here...

Friday, June 06, 2025

Cross-Attention for Cross-Asset Applications: How to mash up features from multiple assets

In the previous blog post, we saw how we can apply self-attention transformers to a matrix of time series features of a single stock. The output of that transformer is a transformed feature vector r of dimension 768 × 1. 768 is the result of 12 × 64: all the lagged features are concatenated / flattened into one vector. 12 is the number of lagged months, and 64 is the dimension of the embedding space for the 52 features we constructed.

What if we have a portfolio of many stocks whose returns we want to predict, or whose allocations we want to optimize? That’s where cross-attention transformers come in. The purpose of cross-attention is that a feature in an asset i may be relevant as a context to a feature of an asset j.

... Read more ...

Friday, May 23, 2025

Applying Transformers to Financial Time Series

In the previous blog post, we gave a very simple example of how traders can use self-attention transformers as a feature selection method: in this case, to select which previous returns of a stock to use for predictions or optimizations. To be precise, the transformer assigns weights on the different transformed features for downstream applications. In this post, we will discuss how traders can incorporate different feature series from this stock while adding a sense of time. The technique we discuss is based partly on Prof. Will Cong’s AlphaPortfolio paper.

Recall that in the simple example in a Poor Person’s Transformer, the input X is just a n-vector with previous returns X=[R(t), R(t-1), …, R(t-n+1)]T. Some of you fundamental analysts will complain “What about the fundamentals of a stock? Shouldn’t they be part of the input?” Sure they should! Let’s say, following AlphaPortfolio, we add B/M, EPS, …, all 51 fundamental variables of a company as input features. Furthermore, just as for the returns, we want to know the n previous snapshots of these variables. So we expand X from 1 to 52 columns (including the returns column). For concreteness, let’s say we use n=12 snapshots, captured at monthly intervals, and regard R(t) as the monthly return from t-1 to t. X is now a 12 × 52 matrix.

Friday, May 16, 2025

A Poor Person's Transformer: Transformer as a sample-specific feature selection method

For those of us who grew up before GenAI became a thing (e.g. Ernie), we often use tree-based algorithms for supervised learning. Trees work very well with heterogeneous and tabular feature sets, and by limiting the number of nodes or the depth of a branch, there is feature selection by default. With neural networks (NN), before deep learning comes around, it is quite common to perform feature selection using L1 regularization - i.e. adding a L1 penalty term to the objective function in order to encourage some of the network parameters to become zero. However, L1 regularizations are quite tedious when we have millions or billions of parameters in a deep neural network. In its place, transformers and attention become the go-to technique for feature selection in a deep neural network (see Chapter 5 of our book.) But beyond making feature selection practical for DNN, the attention mechanism provides one important benefit that is absent from traditional regularization or feature selection methods (such as MDA, SHAP, or LIME, see Chan & Man https://arxiv.org/abs/2005.12483): the selected features depend on each sample. They aren’t selected globally like traditional feature selection methods do. In other words, the features are selected based on their values themselves. In the language of transformers, we use self-attention for feature selection.

Transformers are usually illustrated with textual input. For e.g., a sentence containing 4 features (words/tokens) “I”, “am”, “a”, “student”. Let’s call this input feature vector X. In DNN, each feature may be a vector (e.g. we may use a d-dimensional vector to represent a word/token), as opposed to a scalar. So X may actually have dimension n × d, where n is the number of features (not the number of samples!) and d is the dimension of each feature. A financial application where this can be useful is when one feature (row) vector captures the daily return of a stock, its P/E, dividend yield, …, up d types of features, at a snapshot in time t. Another feature vector captures the same information at time t-1, and so on, up to a lookback of n. So if you have n lookback periods, the feature matrix has dimension n × d. But in many financial applications, each feature is just a real-valued scalar such as the daily return of a single stock. So X=[r(t), r(t-1), …, r(t-n+1)]T. This is the simple example we will use in our Poor Person’s version of transformer: d=1, and X is just a column vector with dimension n × 1.

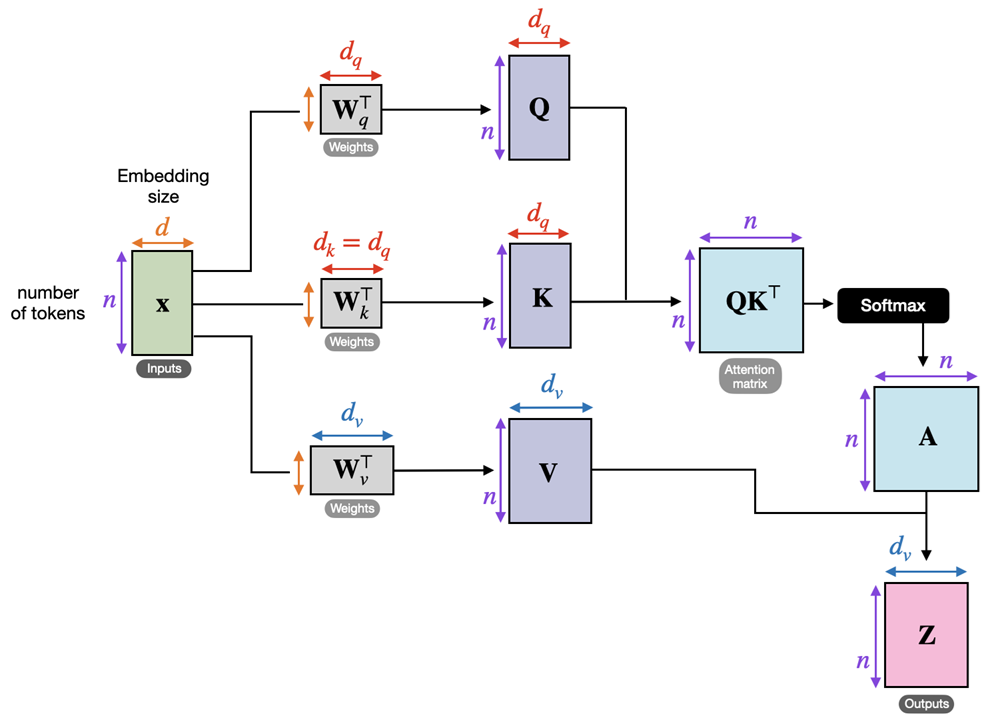

Now, in ordinary transformers, the next step is to transform X into 3 different vectors / matrices: Q (query, with width dq), K (key, with height dk), and V (value, with height dv). An element in Q is like “what this feature is looking for in other features that can provide as context”, an element in K is like “this is the context that this feature can provide, and element in V is like “this is a feature in a new representation”.

In a typical transformer with self-attention, for each input vector X, the Q, K and V values are calculated as linear transformation of X:

The WQ, WK, WV matrices themselves are learned parameters, learned based on the ultimate objective of this NN (e.g. classification, regression, or optimization), but the resulting attention score is computed as the function of the input sample X. The W’s all have heights n, but widths dq, dk, and dv respectively, though dq, dk are often set to be the same dimension. The intuition behind these Q, K, V is we want some linear mixtures of the original feature matrix X that best represent it, reminiscent of the familiar PCA. In the example of the n × d financial feature matrix we described above, we want to linearly project the return and fundamentals of a stock to some “principal component” vector, while preserving the distinctness of each lagged snapshot of these features since the projection is row-wise. I.e. Q, K, V have same height as X and so each row still represents a specific snapshot in time, as seen in the figure below which illustrates the building blocks of a transformer with self-attention.

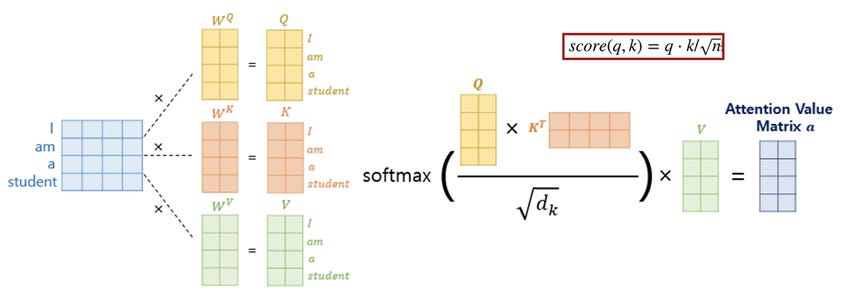

The figure below shows specifically a transformer with n=4, d=4, and dq=dk=dv=2. It shows also how the Q and K matrices are multiplied together, scaled by sqrt(dk) to prevent the magnitude from exploding, and fed through a softmax function to turn them into attention scores in [0, 1], in a process called “Scaled Dot-Product Attention” (for more details, see again Chapter 5 of our book).

Why sqrt(dk)? We will quote Cong et. al. “Assume that the components of q and k are independent random variables with mean 0 and variance 1. Then their dot product,

has mean 0 and variance dk. Why softmax? Softmax function normalizes the scaled dot-product into a matrix where each row is the normalized weights (i.e. they sum to 1) which are the attention weights applicable to the feature value matrix V. To wit,

But, in our Poor Person’s transformer, W is just a scalar, and Q, K, and V are all just 1-dimensional vectors. So we might as well eliminate this step and replace them all by the vector X. Note that this doesn’t collapse the matrix QKT into a scalar or vector. It is still a n × n matrix formed by XXT. Each feature i is still multiplying feature j to form the attention matrix element A(i, j). The elements of each row of A sums to 1 as in all attention matrices. If you ask “What is the feature importance score of feature j”, you can sum over all the values of column j, since column j represents the key feature j.

So if feature importance scores or feature selection are all you are after, we are done. But usually we are interested in downstream applications. In our 1 stock n-returns example, we might be interested in using these n daily returns, with proper feature weights, to predict the next day’s return. In this case, all we need to do it to multiply the attention matrix with V, which in our case is equal to X, to create the “context vector” Z=AV=AX. The context vector is an attention-weighted version of our original feature vector X. Downstream, we can use Z as input to a MLP for supervised learning, such as predicting the next day’s return, or for optimization via reinforcement learning.

Does this work? You can ask ChatGPT or some other favorite chatbot to create a program based on this blog post and try it out. Let us know how the results look in the comments!

P.S.

You may get excited by this feature selection method and think we should throw in a bunch of “heterogeneous” features such as volatility, P/E, earning yield, … of the stock to see if they work better. Unfortunately, the Poor Person’s self-attention method discussed above doesn’t work very well with features that cannot embedded in the same space. For example, it is nonsensical to add together A(i, j)=volatility * P/E and A(i+1, j)=dividend * P/E to form the feature importance score of P/E. To do that, we need to do some normalization and embedding. Also, maybe we want to tell the transformer that r(t), r(t-1), … is a time series and the features are time-ordered. All topics for the next blog post!

Saturday, May 10, 2025

Have LLMs improved over the last year? Comparing their responses to our books' prompts a year later.

The answer to this question may seem obvious if you read the breathless proclamations of AI luminaries, but good quantitative investors should be hype-immune. We want to carefully compare the ChatGPT’s unsatisfactory responses to a couple of the prompts outlined in our book (which are mostly generated in early 2024) to their responses now (April 2025). In addition, if ChatGPT’s response is still not satisfactory, we want to compare the responses of several popular LLMs, some of which weren’t even available in early 2024 or weren’t launched in production mode yet. Of course, to test all the LLMs (e.g. ChatGPT, Copilot, DeepSeek, Gemini, Grok, Perplexity, …) is very time consuming, so we also use Chatbot Arena to randomly pick 2 for each prompt (see https://www.bloomberg.com/news/articles/2025-02-18/before-deepseek-blew-up-one-website-announced-its-arrival).

Let’s try the first and very simple task: plotting annualized returns of an ETF. Shockingly, ChatGPT failed at this task previously, as you could read from our book. It committed the egregious error of annualizing returns by multiplying by sqrt(252).

User Prompt

Write a Python program to download the adjusted closing prices of the ETF BILL, and plot its annualized daily returns chart. We only need YYYY as x-axis label.Unfortunately, many LLMs (such as ChatGPT, Perplexity, gemma-3-4b-it by Google, command-a-03-2025 by Cohere) misinterpreted the question and produced some complicated calculations for the rolling 252-day compound returns. The only chatbot that correctly and simply multiplied the daily returns by 252 and displayed that is Microsoft’s Copilot. Bravo Mr. Nadella!

Now let’s proceed to another task that ChatGPT failed miserably before: summarizing a trading strategy based on Simon and Campasano (2014).

User Prompt

Pretend you are a quantitative finance expert. Please summarize the trading strategies described in this paper. [Author Note: We attach the pdf.]ChatGPT Response

Absolutely—here’s a summary of the trading strategies described in the paper "The VIX Futures Basis: Evidence and Trading Strategies" by David P. Simon and Jim Campasano:

🧠 Core Insight:

The VIX futures basis—the difference between VIX futures prices and the VIX spot index—does not predict changes in the VIX spot index, but does predict changes in VIX futures prices. This creates trading opportunities due to the convergence of futures prices to spot at expiry.

📊 Main Strategy:

Exploit the "Roll" in the VIX Futures Curve

Short VIX futures when the curve is in contango (futures > spot) beyond a threshold.

Buy VIX futures when the curve is in backwardation (futures < spot) beyond a threshold.

These trades take advantage of the fact that VIX futures converge toward the spot VIX price as they approach settlement.

🔧 Implementation Details:

Daily Roll Signal: The basis is divided by the number of business days to settlement.

Enter short if daily roll > $100

Enter long if daily roll < –$100

Exit criteria:

Daily roll falls below $50 (short) or rises above –$50 (long), or

Maximum holding period of 9 business days

⚖️ Hedging Equity Risk:

Because VIX futures prices move inversely to equity markets, trades are hedged using mini-S&P 500 futures.

Short VIX = Short S&P hedge

Long VIX = Long S&P hedge

Hedge ratios are computed using regressions of VIX futures price changes on S&P futures returns, updated daily out-of-sample.

💵 Profitability Summary (2007–2011):

Strategy Mean P&L Sortino Ratio Notes Short VIX (hedged) $792 1.26 62 trades, 2:1 win/loss Long VIX (hedged) $1,018 1.03 40 trades Unhedged versions Slightly higher P&L, but significantly higher downside risk

Transaction costs included full bid-ask spread + brokerage fees.

Strategies showed robust performance even out-of-sample and across sub-periods (pre/post-2009).

🧾 Key Takeaway:

The profitability is mainly driven by the roll yield (the premium or discount in futures relative to spot), and much of the risk can be hedged using equity index futures, enabling statistically significant and economically meaningful returns.

Let me know if you’d like this turned into a code simulation, visual walkthrough, or executive-style slide!

This is a much better response than the one recorded in our book. We truly can’t ask for a better answer. Let’s take ChatGPT up on its offer and turn it into code. The results can found here: https://chatgpt.com/canvas/shared/680a8810a5808191aaa35e4b31d0a813. Looks great, doesn’t it? What we don’t know, however, is whether ChatGPT has used our previous prompt as part of its training data for this version, so this isn’t a completely fair benchmark.

TL;DR

Yes, the answers got much better over the last year. But still not all LLMs give equally satisfactory answers - sometimes one to try quite a few to find the suitable one. We suggest you try out other prompts in our book and see if the answers improved!